The aim of the project is to make the car drive autonomously around a track without crashing into the walls or other objects placed on the track. The only information available to the autopilot is the stream of images from a camera mounted at the front of the car.

The work follows a cycle of data collection, model training, model testing, and diagnosis of issues. Since we are still in the first iteration of this cycle, we have not done any troubleshooting yet, so the data collection and training processes have not been improved so far.

This report contains some preliminary descriptions of the work done and some lessons learned so far. Because of connection issues, we could not collect our own data; instead, we used data from a previous competition, recorded with a different car, and DonkeyCar data recorded on a different track.

1. Collecting Data

The data collection phase is crucial for the success of the autopilot. More data can improve the model, but its quality must not be neglected, so the data has to be collected with care. During data collection, the DonkeyCar's camera records images at 20 frames per second, together with the corresponding steering angle and throttle. Other fields, such as a timestamp or a session ID, are also recorded, but they are not used here.
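
As an illustration of what a single training sample contains, the sketch below parses one JSON record and keeps only the image, steering angle, and throttle, discarding everything else. It assumes the record keys `cam/image_array`, `user/angle`, and `user/throttle` used by DonkeyCar tubs; the helpers `parse_record` and `driving_seconds` are our own, hypothetical names.

```python
import json

# Record keys assumed from the DonkeyCar tub format; adjust if your tubs differ.
IMAGE_KEY = "cam/image_array"
ANGLE_KEY = "user/angle"
THROTTLE_KEY = "user/throttle"

FPS = 20  # camera frame rate used during data collection


def parse_record(line: str) -> tuple[str, float, float]:
    """Return (image filename, steering angle, throttle) from one JSON record.

    Any other fields (timestamps, session IDs, ...) are ignored.
    """
    record = json.loads(line)
    return record[IMAGE_KEY], float(record[ANGLE_KEY]), float(record[THROTTLE_KEY])


def driving_seconds(num_records: int, fps: int = FPS) -> float:
    """Approximate driving time covered by num_records consecutive frames."""
    return num_records / fps
```

At 20 frames per second, one minute of driving produces 1,200 records, which gives a rough idea of how quickly a dataset grows.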

Collecting the data ourselves gives us a lot of flexibility. We will try many different things throughout the project, such as different track setups and different driving styles. This means extra work for data collection, but it also lets us better understand the data we are working with, which helps us identify and fix data-related issues that we would not notice with a predefined dataset. It also gives us insights when troubleshooting the models.

The images seen by the human driver and by the car are completely different. The human has much more information about the circuit than the car gets from its camera, and this difference should be kept in mind while collecting data. For example, when a difficult section of the track approaches, the human might reduce speed while the car's camera can barely see the first turn. Similarly, a human might take a wide turn because they can see the whole track and “sketch” a trajectory in their mind, while the car's camera can barely see the turn.

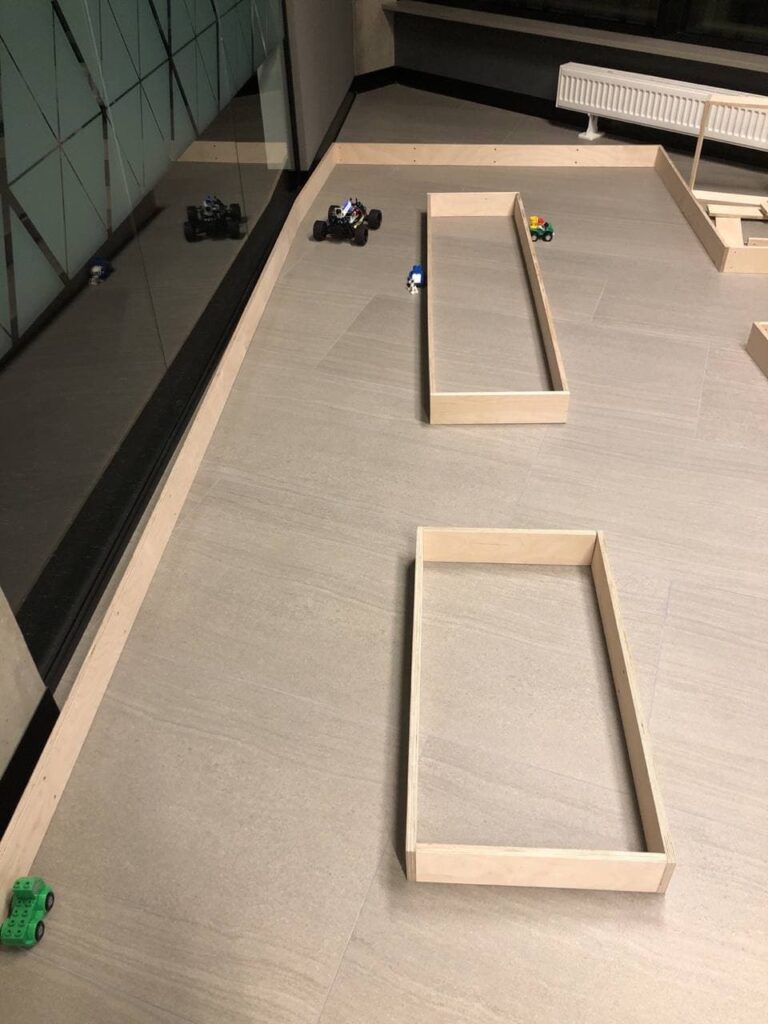

Lighting conditions must also be kept in mind. Since the track is close to a big window, natural lighting influences the images. Therefore, the data should be collected under different lighting conditions, or at least when the luminosity is similar to the conditions under which the self-driving model will be deployed.
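
One simple way to check whether the collected data covers different lighting conditions is to compute the mean brightness of each image and count how many images fall into each brightness band. This is a minimal sketch under our own assumptions: images are RGB arrays with values from 0 to 255 (OpenCV loads images in BGR order, so the weights would need to be reversed), brightness is measured as ITU-R BT.601 luma, and the band edges are arbitrary.

```python
import numpy as np

# ITU-R BT.601 luma weights for the R, G and B channels.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def mean_brightness(image: np.ndarray) -> float:
    """Mean luma of an RGB image with shape (H, W, 3) and values in 0..255."""
    # (H, W, 3) @ (3,) -> (H, W) luma map, then average over all pixels.
    return float((image.astype(np.float64) @ LUMA_WEIGHTS).mean())


def brightness_histogram(images, bins=(0, 64, 128, 192, 256)):
    """Count how many images fall into each brightness band."""
    values = [mean_brightness(img) for img in images]
    counts, _ = np.histogram(values, bins=bins)
    return counts
```

A dataset where almost all images land in a single band was probably recorded at one time of day only.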

Because the competition does not allow any crashes, we did not collect data on crash recoveries (driving backward and then correcting the steering). Crashes are nevertheless very hard to avoid while driving manually, especially for inexperienced drivers, so data cleaning is necessary. One option is the command “donkey tubclean <folder containing tubs>”, which starts a web app with an interface for cutting the recorded videos. Looking at a single image, it is very hard to decide whether it should be kept, whereas a video shows where the car is coming from and where it is going, so we can identify when the wrong steering was applied.
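
`donkey tubclean` lets us mark bad segments interactively. The sketch below only illustrates the underlying idea: once the start and end frames of a bad segment (such as a crash) have been identified in the video, every frame in that range is discarded. `drop_ranges` is a hypothetical helper of our own, not part of the DonkeyCar API.

```python
def drop_ranges(records, bad_ranges):
    """Return the records whose index lies outside every [start, end) range.

    Hypothetical helper, not part of DonkeyCar.
    records: per-frame records ordered by frame index.
    bad_ranges: (start, end) frame-index pairs marked as bad; end is exclusive.
    """
    def is_bad(index):
        return any(start <= index < end for start, end in bad_ranges)

    return [record for index, record in enumerate(records) if not is_bad(index)]
```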

Also, since new data can be obtained easily once the setup is done, data augmentation techniques were not explored.

One thing to keep in mind is that the data should represent all possible situations as far as possible. Because the autopilot makes mistakes, the car might end up in situations that never occur in the training data, as shown in the images below. On the left, the car is facing a corner, which is a new situation for the model: a human would have started steering before ending up there. The right image shows a similar case: the car is facing a wall, and although it is clear that the car should turn right, the situation is new to the model because the human, aiming for smooth driving, would have started the turn earlier. Although these images do not come from the actual DonkeyCar, we expect the same issues when we test our models. For this reason, it is not desirable to have only data in which the driver performs perfectly all the time: the model will eventually make a small mistake, end up in an unseen situation, and crash. When collecting the data for our model, we will make sure to record situations such as the car being too close to the wall, so that the model learns how to proceed in them.
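
One rough, indirect way to check how well the recorded data covers difficult situations is to look at the distribution of steering angles: if almost all samples are close to zero, sharp corrections such as those needed near a wall are under-represented. The sketch assumes steering angles normalized to [-1, 1]; the function name and the number of bins are our own choices.

```python
import numpy as np


def steering_coverage(angles, num_bins=10):
    """Histogram of steering angles over [-1, 1].

    Assumes angles are normalized to [-1, 1]. Nearly empty bins at the
    extremes suggest that sharp corrections are under-represented.
    """
    edges = np.linspace(-1.0, 1.0, num_bins + 1)
    counts, _ = np.histogram(angles, bins=edges)
    return counts, edges
```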

2. Image processing

The training data consists of the images captured by the Raspberry Pi camera on the DonkeyCar. The trained model decides what to do based on the walls visible in the captured image, so we only need the part of the image that shows the walls, which is the lower half of the camera view. We therefore crop away the upper half of the collected images, which makes training more efficient and faster.
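
Cropping is a single slicing operation on the image array. A minimal sketch, assuming images are NumPy arrays of shape (height, width, channels) with row 0 at the top of the frame; cutting at exactly half the height is a starting point that could be tuned.

```python
import numpy as np


def crop_lower_half(image: np.ndarray) -> np.ndarray:
    """Keep only the lower half of an image with shape (H, W, C).

    Row 0 is the top of the frame, so the lower half starts at row H // 2.
    """
    height = image.shape[0]
    return image[height // 2:, ...]
```

For example, a 160x120 frame becomes a 160x60 image, half the number of pixels to process.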

The following images show some examples of frames collected by the DonkeyCar. The upper part of the image contains no useful information for the decision-making process and includes distractions for the model, such as reflections and other objects. In addition, we plan to use some pre-trained networks (e.g. MobileNet, VGG-19, GoogLeNet), which requires resizing the images to the input size these networks expect. This can easily be done with OpenCV or other image processing libraries.

3. Training the model

By default, the DonkeyCar supports standard TensorFlow h5 models, TensorFlow Lite models, TensorRT models (Nvidia Jetson Nano), and Google Coral Edge TPU models. Because we are familiar with TensorFlow, we opted for training TensorFlow h5 models.

For the baseline model, we kept the throttle constant and predicted the steering using classification with three classes: straight, left, and right. This simplifies the problem. Later on, we will also try regression models, since different levels of steering are desirable for smoother driving.
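
The three classes can be derived from the recorded continuous steering angles with a simple threshold. The sketch below assumes angles in [-1, 1] with negative values meaning left; the dead-zone value of 0.1 is a hypothetical choice, not a tuned parameter.

```python
# Hypothetical dead zone: angles within +/- this value count as "straight".
STRAIGHT_THRESHOLD = 0.1

CLASSES = ("left", "straight", "right")


def steering_to_class(angle: float, threshold: float = STRAIGHT_THRESHOLD) -> int:
    """Map a continuous steering angle to a class index.

    Assumes negative angles mean left and positive angles mean right.
    """
    if angle < -threshold:
        return 0  # left
    if angle > threshold:
        return 2  # right
    return 1  # straight
```

The threshold controls how the samples are split between the classes: a larger dead zone labels more frames as straight, which may leave few examples of left and right turns.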

The training is done in Colab notebooks, and the metrics used are accuracy and loss. Although these metrics do not reflect the model's real-world performance, they are a good start until we have the opportunity to test with the real car.
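
For reference, a classifier of this kind can be defined and compiled in Keras with loss and accuracy as the reported metrics. The architecture below is a small, hypothetical CNN for cropped 160x60 images (assuming 160x120 camera frames), not the model we actually trained; it assumes TensorFlow 2.x and integer labels 0 (left), 1 (straight), 2 (right).

```python
import tensorflow as tf


def build_classifier(input_shape=(60, 160, 3), num_classes=3) -> tf.keras.Model:
    """Small hypothetical CNN that outputs a probability for each steering class."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        tf.keras.layers.Rescaling(1.0 / 255),  # uint8 pixels -> [0, 1]
        tf.keras.layers.Conv2D(24, 5, strides=2, activation="relu"),
        tf.keras.layers.Conv2D(32, 5, strides=2, activation="relu"),
        tf.keras.layers.Conv2D(64, 3, strides=2, activation="relu"),
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dense(64, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    # Integer class labels, so the sparse variant of cross-entropy is used.
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Training and saving in the h5 format would then look like `model.fit(x_train, y_train, validation_split=0.2, epochs=10)` followed by `model.save("pilot.h5")`, where `x_train` and `y_train` are hypothetical arrays of images and class labels. DonkeyCar's built-in pilot classes expect their own model layouts, so a custom model like this one may need a matching pilot part before it can drive the car.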

A big difference between a human driver and an AI model based on single frames is the amount of information available for making decisions. Although we cannot make the AI see future turns like a human, we can use information from previous frames to support the decision. In other words, we will try models that take a few stacked consecutive frames as input, which we expect to produce better results.
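
Frame stacking can be implemented with a fixed-length buffer: each new frame is appended, the oldest one is dropped, and the buffered frames are concatenated along the channel axis. A minimal sketch, assuming (H, W, C) NumPy frames; the class name `FrameStacker` is hypothetical, and padding the history with copies of the first frame at start-up is our own choice.

```python
from collections import deque

import numpy as np


class FrameStacker:
    """Keep the last n frames and stack them along the channel axis."""

    def __init__(self, n: int = 3):
        self.n = n
        self.frames = deque(maxlen=n)  # oldest frame is dropped automatically

    def push(self, frame: np.ndarray) -> np.ndarray:
        """Add a frame (H, W, C) and return an array of shape (H, W, C * n)."""
        if not self.frames:
            # At start-up, pad the history by repeating the first frame.
            self.frames.extend([frame] * (self.n - 1))
        self.frames.append(frame)
        # Channels are ordered from oldest to newest frame.
        return np.concatenate(list(self.frames), axis=-1)
```

With 3 frames at 20 frames per second, the model sees about 0.1 s of history. The same stacking has to be applied to consecutive records when building the training set.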

4. Testing

Comparing different models requires metrics. The best-performing models are not necessarily the ones with the highest accuracy on the test set. When dealing with different models, it is really hard to decide which direction to take without deploying them and observing their real performance. In our case, the evaluation metric is aligned with the competition goal: since the autopilot has to drive as fast as possible, we are interested in how far we can increase the throttle before the car starts to crash.
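
Since the baseline keeps the throttle constant, this evaluation could be run as a throttle sweep: drive the car at increasing throttle values and record whether each run crashed. The helper below is a hypothetical sketch that returns the highest throttle with only crash-free runs that lies below the lowest throttle at which any crash occurred.

```python
def max_safe_throttle(trials):
    """Return the highest crash-free throttle below the first crash, or None.

    Hypothetical helper. trials: iterable of (throttle, crashed) pairs from
    test runs on the track.
    """
    trials = list(trials)
    crash_levels = [throttle for throttle, crashed in trials if crashed]
    first_crash = min(crash_levels) if crash_levels else float("inf")
    # A crash at some throttle disqualifies that level and every faster one.
    safe = [throttle for throttle, crashed in trials
            if not crashed and throttle < first_crash]
    return max(safe) if safe else None
```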

We have not tested any model yet, so we hope that our assumptions hold!